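Original article (in Spanish): ¿Por qué el principal centro científico de Chile sobre cambio climático quedó sin financiamiento? La versión de CR2 tras ser excluido (Why was Chile's leading scientific center on climate change left without funding? CR2's account after its exclusion)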

Climate Science Center Loses Funding Despite Top Scientific Ranking and a ‘Highly Impressive’ Proposal

In a decision that has shocked the scientific community, the Climate Science and Resilience Center (CR2) will lose its state funding on March 1, 2026, after being excluded from the new allocation of National Interest Centers. According to an analysis released by its board, CR2, whose proposal received the third-best overall scientific evaluation among 44, fell to 12th place after the National Panel's rating, hundredths of a point below the last awarded project, and then to 16th once regional prioritization was applied.

The core of the controversy lies in the disparity between assessments. While the International Panel praised the proposal as “high merit,” the National Panel gave it a 4.17, just above the lower limit of the “Very Good” category. Ironically, the panel's written justification, which CR2 obtained, describes the project as “highly impressive” with an “outstanding team,” noting only “minor gaps.” “ANID's argument for rejecting our proposal is that any score between 4.0 and 4.9 is valid, but that does not explain why a proposal deemed ‘highly impressive’ receives a score so close to the lower limit,” said Roberto Rondanelli, director of the CR2.0 proposal.

CR2's exit leaves Chile without an interdisciplinary center dedicated solely to studying climate change and resilience, in a country especially vulnerable to fires, storms, and floods. The center's board emphasizes that it does not question the quality of the awarded projects, but rather the lack of traceability in a decision that concentrates most of the new centers in the Metropolitan Region while sidelining initiatives backed by excellent scientific merit.

For details on the evaluations, figures from the process, and a complete analysis of this ruling, we invite you to review the official publication from CR2 below.

National Interest Center Allocation: How Did CR2 Lose Its Funding?

By the CR2 Board (Board of the CR2.0 Renewal Proposal)

In October 2024, the National Agency for Research and Development (ANID) opened applications for a new funding instrument for National Interest Research and Development Centers of Excellence. These centers are a new category within the National Centers Plan of the Ministry of Science, Technology, Knowledge and Innovation, established under President Gabriel Boric's government (MinCiencia, 2023), and the instrument replaces the Fund for Research Centers in Priority Areas (FONDAP). Through FONDAP, the state had funded 12 research centers, including CR2, in areas deemed priorities for the country, building capacity over 13 years of operation (2012-2025).

The Climate Science and Resilience Center (CR2) took part in the National Interest Centers competition with a renewal proposal to advance climate science, focusing on the societal impacts of climate change, on building resilience, and on generating scientific evidence for decision-making. On December 26, 2025, ANID announced the results, awarding 11 national interest centers with combined resources of approximately US $77 million over the next five years. Although the scores were not officially disclosed, CR2's proposal was ranked fifth on the waiting list, behind the 11 awarded proposals and four other projects led by universities outside the Metropolitan Region, which were prioritized for that reason.

The results imply that CR2 will lose ANID funding starting March 1, 2026. The news of the funding loss has caused significant concern within the national and international scientific community, among users of the databases and platforms developed at the center, as well as public officials, media outlets, and the general public.

This article explains the process that led to CR2’s loss of funding and the actions taken in response.

Evaluation of the New National Interest Centers: Two Scientific Stages and a National Expert Panel

Unlike the FONDAP instrument, this competition did not define priority areas for centers to address. Instead, each proposal had to justify the relevance of its research area, and the competition added a National Expert Panel to evaluate this dimension, among others.

The competition evaluation was structured in three stages:

- written proposal evaluation performed by scientific peers (weighted at 30%);

- evaluation by an International Panel of 14 scientists from various fields based on an interview with the leading team of each proposal (35%); and

- evaluation by a National Panel of 7 experts who took part in the same interview as the International Panel and, “considering the totality of evidence,” would formulate a final assessment according to the criteria established in the competition guidelines (35%).

It is essential to clarify that both the National Panel and the International Panel participated in the same interview and evaluated similar criteria established by the guidelines (albeit with different weights), including the scientific relevance of the proposal and its contribution to national interest and public policies.
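The weighting described above can be written as a short sketch. It assumes, as the text implies but does not spell out, that the final score is a plain linear weighted sum of the three stage scores, each on the 0 to 5 scale; the stage keys and function names are hypothetical labels, not ANID's.

```python
import math

# Stage weights as described in the competition text (30% / 35% / 35%).
# ASSUMPTION: the final score is a plain linear weighted sum of the three
# stage scores on the 0-5 scale; the exact official formula is not given here.
# The dictionary keys are hypothetical labels chosen for illustration.
STAGE_WEIGHTS = {
    "written_peer_review": 0.30,  # stage 1: written proposal, scientific peers
    "international_panel": 0.35,  # stage 2: interview, International Panel
    "national_panel": 0.35,       # stage 3: same interview, National Panel
}


def final_score(stage_scores: dict[str, float]) -> float:
    """Combine per-stage scores (0-5) into a weighted final score (0-5)."""
    missing = STAGE_WEIGHTS.keys() - stage_scores.keys()
    if missing:
        raise ValueError(f"missing stage scores: {sorted(missing)}")
    for stage, score in stage_scores.items():
        if not 0.0 <= score <= 5.0:
            raise ValueError(f"{stage} score {score} is outside the 0-5 scale")
    return sum(weight * stage_scores[stage] for stage, weight in STAGE_WEIGHTS.items())


def scientific_share() -> float:
    """Combined weight of the two scientific stages (stages 1 and 2)."""
    return STAGE_WEIGHTS["written_peer_review"] + STAGE_WEIGHTS["international_panel"]


# A proposal scoring 5.0 in every stage gets a final score of 5.0
# (compared with a tolerance, since the weights are floating-point values).
assert math.isclose(
    final_score({"written_peer_review": 5.0, "international_panel": 5.0, "national_panel": 5.0}),
    5.0,
)
```

Under this assumption, the two scientific stages together carry 65% of the final score, matching the share the figure captions attribute to stages 1 and 2, while the National Panel alone carries the remaining 35%.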

Results of the Competition and the Role of the National Panel in the Selection of Awardees

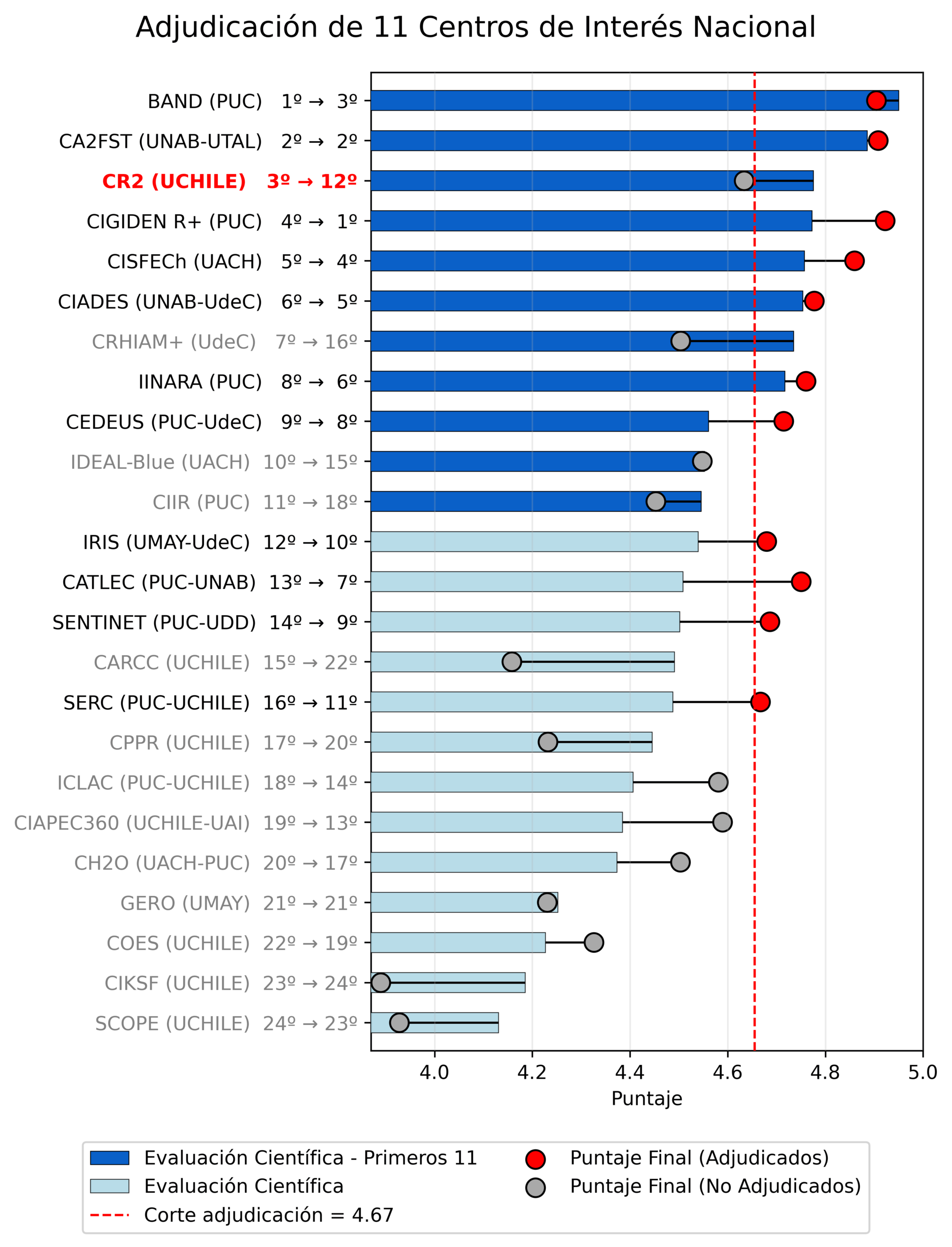

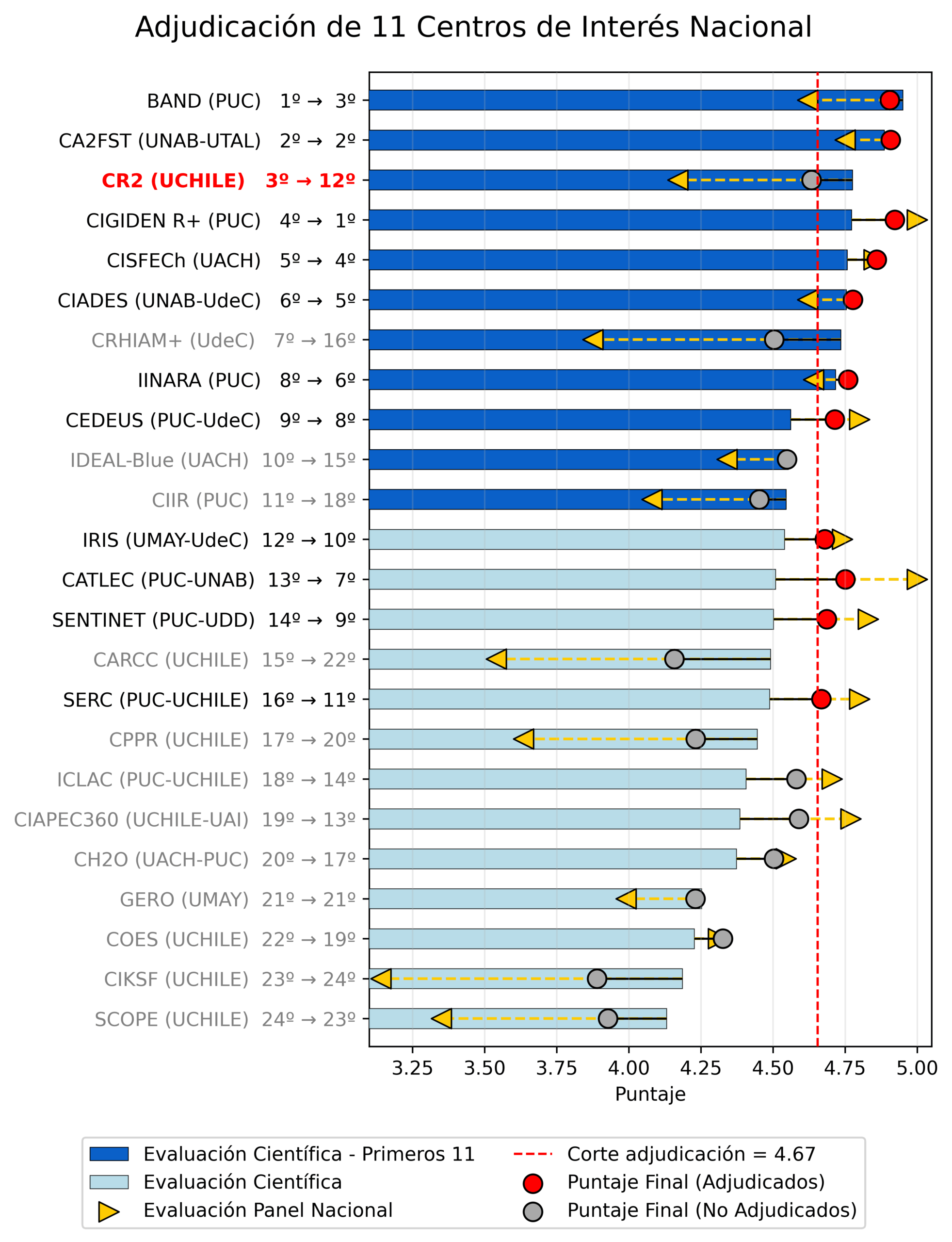

Figure 1 displays the results of the competition, showing the scientific evaluation (stages 1 and 2) and the final score of each proposal after the National Panel evaluation. These results reveal a pattern in which the National Panel's assessment strongly influences where proposals land relative to the award threshold.

Rather than a positive correlation between the final score and the scientific evaluation, with moderate and traceable adjustments, the National Panel stage appears to have substantially altered the positions of a certain set of proposals relative to the award threshold. Its scores reorder the ranking produced by the scientific evaluation stages, leaving some proposals below the cutoff score (leftward movement in the graph) and lifting others above it (rightward movement in the graph). We note the following points:

- A decisive drop for CR2 from third place to twelfth (and later to sixteenth). The center achieved outstanding scientific performance (of the 44 evaluated proposals, it obtained the third-best overall evaluation and the second-best in the interviews with the International Panel), and yet, after the National Panel stage, it was positioned just below the cutoff score, hundredths of a point behind the eleventh awarded project. Additionally, due to regionalization criteria not applied to the first 11 centers, the CR2 proposal was placed sixteenth overall, fifth on the waiting list.

- A similar pattern was observed in centers such as CRHIAM, IDEAL, and CIIR, which occupied promising positions in the first two stages of scientific evaluation but also fell below the threshold once the National Panel evaluation was incorporated.

- Conversely, some proposals that were not among the top eleven in the scientific evaluations crossed the award threshold after the National Panel evaluation. This movement does not call into question the quality of the awarded projects; rather, it highlights the critical role that the National Panel's evaluation stage plays in defining the final results (for further details, see Figure 2).

Although the National Panel was entitled to apply its own criteria, distinct from those used in the previous stages, and carried 35% of the total score, the magnitude and direction of the adjustments observed call for a clear and verifiable justification of the criteria and procedures used. To date, no such explanation has been provided.

[1] The criteria used in each evaluation stage and the composition of the national and international panels are available in the document “Annex No. 1 Evaluations,” which we received with the evaluation of our proposal and which we make available at this link.

Figure 1. Competition results. The blue and light blue bars show the results of the scientific evaluation (stages 1 and 2, corresponding to 65% of the total score). The black lines, accompanied by a red or gray dot, show the Final Score (after the National Panel evaluation) for selected and non-selected centers, respectively. The National Panel scores that determine the final scores are detailed in Figure 2 at the end of this document. Each evaluation stage produces a score on a scale of 0 to 5, with decimals allowed. For each proposal on the y-axis, its ranking in the scientific evaluation and its final ranking after the National Panel evaluation are indicated. The dotted red line marks the cutoff defining the eleven centers awarded in the competition. The data cover all awarded centers, all waiting-list centers except two, and some non-awarded centers, and were obtained through transparency requests and/or provided by the project teams themselves.

Contradiction between the Qualitative Evaluation and the Numerical Score of the National Panel

It is entirely possible for the National Panel to reach a justified evaluation that differs from those of the previous stages. However, in a sound evaluation procedure, one would expect the qualitative judgments issued by the panel to be consistent with the scores it assigns. In the case of CR2, the National Panel's justification, which is largely complimentary, is hard to reconcile with the low numerical rating of 4.17 it assigned, which placed the center below the cutoff line:

(Our translation from the original English evaluation): CR2.0 presents a compelling and highly relevant research proposal, with a well-defined scientific framework, an outstanding team, and strong institutional backing. The proposal is particularly strong in addressing national climate challenges and linking to public policies while demonstrating a clear commitment to outreach and societal engagement. The integration of gender equality and inclusivity is another positive aspect of the proposal, although it could benefit from a higher level of detail.

The slight reduction in the score is due to some areas where the proposal could be more specific or more developed. These include: clarifying the mechanisms of interdisciplinary collaboration, improving the articulation of private sector participation, providing more specific strategies for long-term financial sustainability, and offering a more detailed gender equality action plan with quantifiable outcomes.

Despite these minor gaps, overall, the proposal is highly impressive and well aligned with national and global climate resilience needs. Therefore, the score reflects a project highly effective for addressing key scientific, public policy, and social challenges related to climate change.

We have been able to compare the evaluation of the National Panel of CR2 with those of other awarded centers, and we see that, in comparison, the written justification for CR2 is equal to or even more laudatory than that of some funded proposals. In that context, the numerical rating assigned —and the consequent non-award— become even more difficult to explain in terms of the criteria applied and traceability.

Current Status

Over a month after the competition results were disclosed, with the 11 National Interest Centers already awarded and in implementation phase, a fundamental question persists: why was CR2’s proposal not funded despite receiving excellent evaluations in the scientific stages and the high praise from the National Panel, which rated the proposal as “highly impressive”?

After the competition results were announced, and following the administrative procedure established in the competition guidelines, CR2 proposal director Roberto Rondanelli filed an appeal seeking clarification of these discrepancies. On Tuesday, February 10, 2026, ANID rejected the appeal. On the discrepancy between the justification and the score assigned to CR2's proposal by the National Panel, ANID responded as follows:

Regarding the differences stated by Mr. Rondanelli related to the application of the evaluation scale defined in the competition guidelines, contained in Resolution Affected No. 8 of 2024, it is necessary to point out that this scale is structured in categories from 0 to 5, such as Excellent (5.0) or Very Good (4.0–4.9), among others, which allow panels to weigh, within a range, both positive aspects and identified gaps in each criterion. The score awarded by evaluators and panels arises from the evaluation and detection of gaps detected by experts, who also consider strengths, observations, risks, and areas for improvement within the same criterion. In this sense, evaluative expressions such as “impressive,” “very effective,” or “minor gaps” do not impose a requirement to assign a 5.0, since the category of 4.0 to 4.9 of “Very Good” precisely admits high merit proposals that present minor areas for improvement justifying scores lower than excellence. Therefore, the report from the National Panel provided to the applicant consistently shows that, in each criterion, alongside positive elements, specific weaknesses are identified that prevent achieving the “Excellent (5.0)” category. The score assigned falls within the permitted range by the scale and reflects the existence of strengths and aspects to improve, mirroring the analysis and evaluation process of experts and panels, without implying contradiction or incoherence as Mr. Rondanelli claims.

Each reader can judge how convincing this explanation is. In our view, ANID’s central argument amounts to saying that belonging to the “Very Good” category means that any score within the 4.0–4.9 range is defensible. But that is precisely the point: recognizing a possible range does not explain the specific decision. What remains lacking is the traceability that connects, concretely and verifiably, the “gaps” mentioned with a particular value within the range, and especially with a score so close to the lower limit of the category. If the report itself describes a high performance with “minor” gaps, what identifiable element, and with what weight, justifies a score of 4.17 and not, for example, a 4.8 or a 4.9? As long as that connection is not precisely made explicit, the fundamental question remains open and the discrepancy between the tenor of the justification and the magnitude of the assigned score is not truly clarified.
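The 35% weight of the National Panel stage makes this question concrete. Assuming that the final score $S_{\text{final}}$ is a linear weighted sum of the written review score $S_1$, the International Panel score $S_2$, and the National Panel score $S_{\text{NP}}$ (symbols introduced here for illustration, with the weights stated in the competition description), the effect of a 4.8 instead of a 4.17 within the same category would be:

$$
S_{\text{final}} = 0.30\,S_1 + 0.35\,S_2 + 0.35\,S_{\text{NP}}
$$

$$
\Delta S_{\text{final}} = 0.35\,(4.8 - 4.17) = 0.35 \times 0.63 \approx 0.22
$$

On that assumption, the choice of a value within the “Very Good” range alone shifts the final score by roughly 0.22 points, more than the margin of hundredths of a point that separated CR2 from the eleventh awarded project.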

The outcome of the competition, now final after the response to the appeal, leaves Chile without CR2: a research center specifically dedicated to studying climate change and resilience to its impacts. This happens in a particularly vulnerable country, where communities and ecosystems face increasingly frequent threats and extreme events such as heatwaves, fires, storms, and floods, and where the law requires adaptation to climate change to be based on the best possible evidence.

None of what we have pointed out here seeks to question the quality or merit of the awarded proposals. All address matters highly relevant to the national interest (adolescence, renewable energy, transportation, and disasters, among others) and are backed by highly competent scientific teams. Our observation is systemic and concerns the aggregate result of the process: the awards ended in an unusually high concentration along several dimensions. Seven centers are associated with a single main institution; four are within the same faculty; no awarded center is led by a public university; a large share of the centers work outside the social sciences and humanities; and ten centers are based in the Metropolitan Region, among other patterns.

An outcome like this can occur in an open, competitive contest, especially when resources are scarce. However, given the magnitude of this instrument and its long-lasting implications for the national scientific system, a particularly robust, traceable, and verifiable justification was required, in keeping with a program designed to guide evidence-based public policy over the next decade. In our case, even after the appeal, no explanation has been given of why the National Panel assigned a score that does not match its own justification and that places CR2 just below the award threshold, nor of why the institutional response does not substantively address the arguments presented. In a country that needs to strengthen both its scientific capacity and trust in its institutions, decisions of this magnitude can only rest on public, clear, and verifiable reasons. That is the explanation that citizens, and the scientific system itself, have a right to know.

NOTE: This CR2 Analysis will be updated if new relevant information becomes available.

Figure 2. Competition results with detail of the National Panel score. The blue and light blue bars show the results of the scientific evaluation (stages 1 and 2, corresponding to 65% of the total score). The yellow triangles show the scores assigned by the National Panel, and the black lines, accompanied by a red or gray dot, correspond to the Final Score (after the National Panel evaluation) for selected and non-selected centers, respectively.